Private Embedding

Vector Embeddings

Vector embeddings are numerical representations of unstructured data, such as text, images, audio, and video, in the form of vectors. These embeddings capture the semantic similarity of objects by mapping them to points in a vector space, where similar objects are represented by vectors that are close to each other.

Example

For example, in the case of text data, “cat” and “kitty” have similar meanings, even though the two words are very different when compared letter by letter. For semantic search to work effectively, the embedding representations of “cat” and “kitty” must capture their semantic similarity. This is where vector representations are used, and why their derivation is so important.

In practice, vector embeddings are fixed-length arrays of real numbers (typically hundreds to thousands of elements) generated by machine learning models. The process of generating a vector for a data object is called vectorization. Weaviate generates vector embeddings using integrations with model providers (OpenAI, Cohere, Google PaLM, etc.) and stores both objects and vector embeddings in the same database. For example, vectorizing the two words above might result in the following word embeddings:

cat = [1.5, -0.4, 7.2, 19.6, 3.1, ..., 20.2]

kitty = [1.5, -0.4, 7.2, 19.5, 3.2, ..., 20.8]

These two vectors have a very high similarity. In contrast, vectors for “banjo” or “comedy” would not be very similar to either of these vectors. To this extent, vectors capture the semantic similarity of words.
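One common way to quantify "very high similarity" is cosine similarity. The sketch below is only illustrative: it uses just the six components of `cat` and `kitty` that are visible above (the elided middle is left out), and the `banjo` vector is a made-up placeholder, not the output of any real model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Only the components shown in the text; the elided ones are omitted.
cat = [1.5, -0.4, 7.2, 19.6, 3.1, 20.2]
kitty = [1.5, -0.4, 7.2, 19.5, 3.2, 20.8]
# Hypothetical vector for an unrelated word, invented for this example.
banjo = [-8.3, 12.1, 0.4, -2.7, 15.0, -1.9]

sim_cat_kitty = cosine_similarity(cat, kitty)
sim_cat_banjo = cosine_similarity(cat, banjo)

print(f"cat vs kitty: {sim_cat_kitty:.4f}")
print(f"cat vs banjo: {sim_cat_banjo:.4f}")
```

A value near 1 means the vectors point in almost the same direction, while values near 0 or below indicate unrelated or opposing directions. Real systems may use other measures, such as dot product or Euclidean distance.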

How to create vector embeddings?

- Word-level dense vector models (word2vec, GloVe, etc.)
- Transformer and other contextual models (BERT, ELMo, and others)

Vector embedding visualization

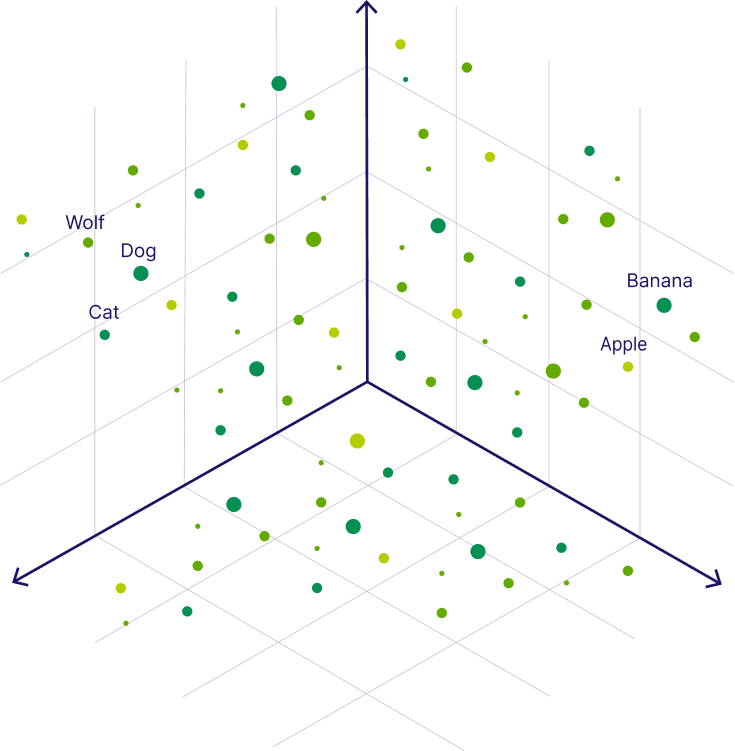

The image shows what vector embeddings of data objects in a vector space could look like. Each object is embedded as a 3-dimensional vector for ease of understanding; in practice, a vector can have anywhere from ~100 to 4000 dimensions.

In the image, the vectors for the words “Wolf” and “Dog” are close to each other because dogs are direct descendants of wolves. Close to the dog is the word “Cat”, which is similar to “Dog” because both are animals that are also common pets. Further away, on the right-hand side, are words that represent fruit, such as “Apple” and “Banana”, which are close to each other but far from the animal terms.
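The layout described above can be mimicked with a handful of toy points. In this minimal sketch the 3-dimensional coordinates are invented to match the described picture (animals in one cluster, fruit in another) and are not taken from the image or from any model; `nearest` is a hypothetical helper that returns the closest other word by Euclidean distance.

```python
import math

# Hypothetical 3-D coordinates chosen to resemble the described layout.
points = {
    "Wolf": (1.0, 4.0, 2.0),
    "Dog": (1.5, 3.5, 2.2),
    "Cat": (2.2, 3.0, 2.5),
    "Apple": (8.0, 1.0, 6.0),
    "Banana": (8.5, 1.4, 5.6),
}


def nearest(word):
    """Return the other word whose point is closest to `word`."""
    origin = points[word]
    candidates = [
        (math.dist(origin, point), other)
        for other, point in points.items()
        if other != word
    ]
    return min(candidates)[1]


for word in points:
    print(f"{word} -> {nearest(word)}")
```

With these made-up coordinates, each animal's nearest neighbor is another animal and each fruit's nearest neighbor is the other fruit, which is the behavior a semantic search relies on.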

Semantic Search

Vector Comparison